News Round-Up

25 April 2024

by Will Jones

My BBC Complaint About Chris Packham’s Daily Sceptic Slur

25 April 2024

by Toby Young

A.V. Dicey Did Not Foresee the Gender Recognition Act

25 April 2024

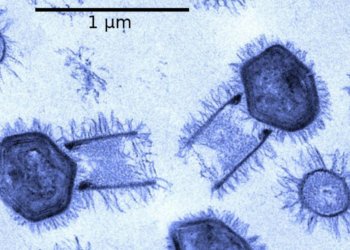

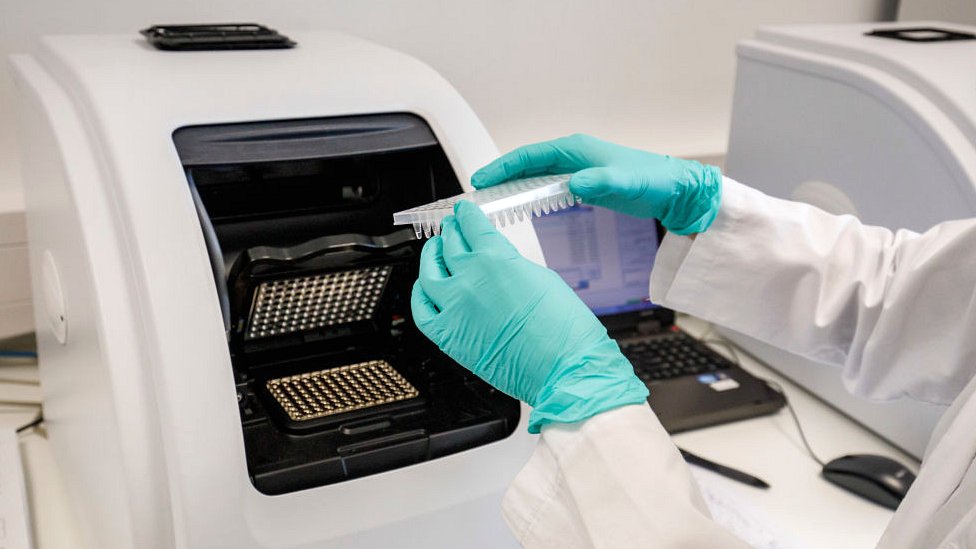

Another Clue Pointing to an American Origin of the Virus

25 April 2024

by Will Jones

Eastern Europe is Showing Britain Up on Free Speech

25 April 2024

by Štěpán Hobza

Transgenderism, Social Media – and Aliens

25 April 2024